At the moment, self-driving cars still have a long way to go before they can be approved for road use. At some point, however, these vehicles should be part of the everyday street scene. Indispensable here are the sensors, the “eyes” without which the cars could not find their way. But this raises a question: Does vision decline with age not only in humans, but also in these autonomous cars? And how can you tell if a car can no longer “see” so well, or might even have gone completely “blind” and need new sensors? Such a decline should be detected before it becomes obvious through an accident. A researcher at Empa, the Swiss Federal Laboratories for Materials Science and Technology, is investigating this question.

While tests with autonomous cars are already well advanced in the U.S., they are still in their infancy in Europe. Recently, for example, a test vehicle drove for 75 minutes through San Francisco and mastered all situations with very little intervention from programmers. Tesla has also been testing autonomous driving with a few beta testers since last year.

A backyard test site

This is still a long way off at Empa in Dübendorf, Switzerland. Here, a five-meter Lexus RX-450h is making its rounds on a course just 180 meters long in a secluded backyard of the Empa campus. “We are investigating how these sensors work in different environmental conditions, what data they collect and when they make mistakes or even fail,” says Miriam Elser. She works in Empa’s Vehicle Propulsion Systems Laboratory and is leading the project. “Every human driver has to pass an eye test before getting a driver’s license. Professional drivers have to repeat this test regularly. We want to develop an eye test for autonomous vehicles so that they can be trusted even when they are several years old and have thousands of miles under their belt.”

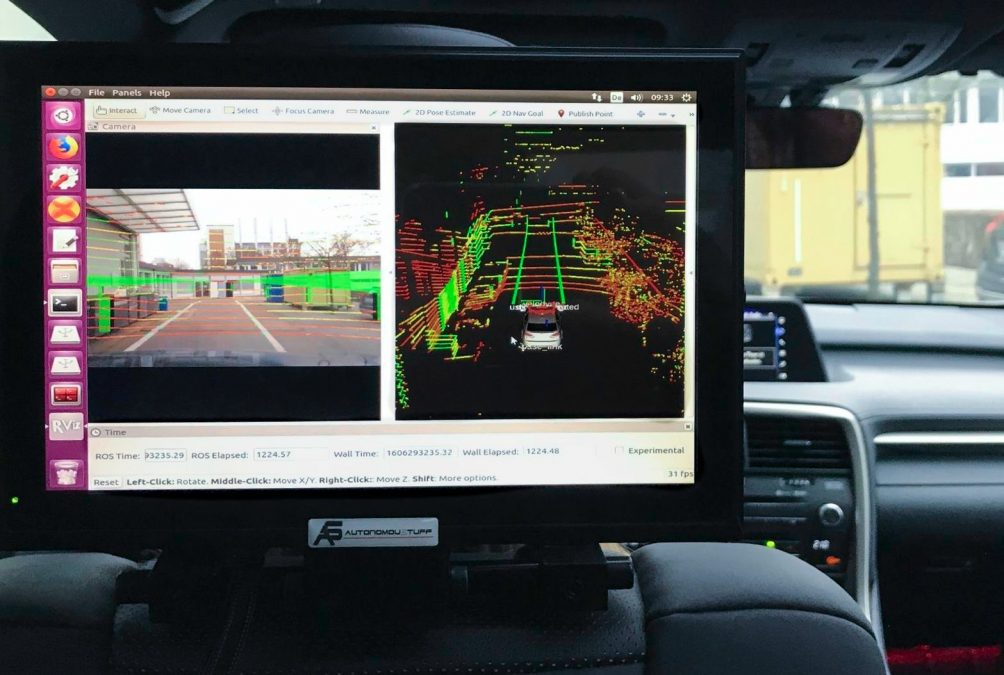

So far, little attention has been paid to this topic: only about 20 of roughly 1,000 research papers on autonomous driving published in the past five years have dealt with the quality of sensor data. Empa is now looking specifically at this aspect. For this purpose, the Mobileye camera behind the windshield observes freshly painted road markings, the Velodyne lidar scans the window front of the same laboratory building on every lap, and the Delphi radar behind the radiator grille of the Lexus measures the distance to five tin waste bins set up to the left and right of the course. The data is processed in a “black box.” The know-how behind this processing is worth a lot of money and is carefully guarded by Google, Apple, Tesla, Cruise LLC and the other major manufacturers researching autonomous vehicles, the Swiss researchers emphasize. None of them would let anyone peek behind the scenes.
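The idea of checking a sensor against fixed reference targets, such as the five waste bins along the course, can be illustrated with a minimal Python sketch. It is not Empa's method: the function name `vision_check`, the reference distances and the tolerance are all hypothetical and chosen purely for illustration.

```python
from statistics import mean

# Hypothetical surveyed distances (in meters) to five fixed reference targets.
# These numbers are invented for illustration and do not come from Empa.
REFERENCE_M = [4.0, 6.5, 9.0, 12.0, 15.5]


def vision_check(measured_m, reference_m=REFERENCE_M, tolerance_m=0.25):
    """Compare sensor readings with known reference distances.

    A reading of None stands for a target the sensor did not detect at all.
    Returns the mean absolute error over detected targets, the number of
    missed targets, and an overall pass/fail flag.
    """
    if len(measured_m) != len(reference_m):
        raise ValueError("expected one reading per reference target")

    # Absolute error for every target the sensor actually reported.
    errors = [abs(m - r) for m, r in zip(measured_m, reference_m) if m is not None]
    missed = sum(m is None for m in measured_m)

    mean_error = mean(errors) if errors else float("inf")
    # Pass only if every target was seen and every error is within tolerance.
    passed = missed == 0 and all(e <= tolerance_m for e in errors)
    return {"mean_error_m": mean_error, "missed": missed, "passed": passed}


if __name__ == "__main__":
    print(vision_check([4.1, 6.4, 9.0, 12.1, 15.5]))   # small deviations
    print(vision_check([4.1, None, 9.9, 12.0, 15.5]))  # one miss, one large error
```

Repeating such a check over months would show whether errors grow or targets start to be missed as a sensor ages, which is the kind of trend an “eye test” would need to catch.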

Assessing the “vision and judgment” of autonomous cars

Yet sensor quality plays an important role in the road approval of autonomous cars. For the planned field tests in real road traffic, one question now being asked is at what point things become dangerous: when do sensors fail completely or make such serious errors that the test must be aborted or modified? For such field tests alone, it must be possible to quickly and accurately assess the “vision and judgment” of autonomously driving cars, say the researchers.

To this end, Empa is investigating commercially available sensors in practical use while the Swiss Federal Institute of Metrology (METAS) is studying the same sensors in a laboratory environment. Also involved in the research project is ETH Zurich’s Institute of Dynamic Systems and Control, which is looking at the next generation of vehicle sensors.

Cover photo: What does a car see? Laser scan of the Empa test track: the laboratory building on the left, trees on the right, a pedestrian in front of the car. Image: Empa
